Sound is becoming more important in UI/UX, yet it is discussed far less than appearance and motion in both research and practice, partly because few sound designers work in this domain. We therefore developed UI sound assets that are available free of charge so that UI/UX developers can easily explore interaction design with sound. We also provide a JavaScript library that makes it relatively easy for non-programmers to implement the sound assets.

https://snd.dev/

Necomimi is a new communication tool that augments the human body and its abilities. This cat-ear-shaped device reads brainwaves and expresses your emotional state before you start talking. Just put on Necomimi: when you are concentrating, the ears rise. When you are relaxed, they lie down. If you are concentrating and relaxed at the same time, the ears rise and move actively. In general, professional athletes demonstrate this ability the most.

https://dentsulab.tokyo/works/necomimi
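
As an illustration only, the ear behavior described for Necomimi can be sketched as a mapping from two brainwave-derived scores to an ear pose. The 0-to-1 score range, the `threshold` value, the `EarPose` names, and the `NEUTRAL` fallback are all assumptions made for this sketch; the device's actual logic is not documented here.

```python
from enum import Enum


class EarPose(Enum):
    NEUTRAL = "neutral"                      # assumed fallback, not described in the text
    RAISED = "raised"                        # concentrating
    LOWERED = "lowered"                      # relaxed
    RAISED_AND_MOVING = "raised_and_moving"  # concentrating and relaxed at once


def ear_pose(attention: float, relaxation: float, threshold: float = 0.6) -> EarPose:
    """Map hypothetical attention/relaxation scores (0 to 1) to an ear pose."""
    focused = attention >= threshold
    relaxed = relaxation >= threshold
    if focused and relaxed:
        return EarPose.RAISED_AND_MOVING
    if focused:
        return EarPose.RAISED
    if relaxed:
        return EarPose.LOWERED
    return EarPose.NEUTRAL
```

For example, `ear_pose(0.9, 0.8)` yields `EarPose.RAISED_AND_MOVING`, the state the text associates with professional athletes.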

A promotional video and website for GRANDAGE, APIA's new brand of fishing gear. With guest Taisuke, a breakdancer who performs nationally and internationally, we made a promotional video set to music built from samples of fishing sounds, created by Juicy from HIFANA. The website features a sampler and sequencer that use fishing sounds and video.

https://www.apiajapan.com/grand/s/tage/

Live camera-based entertainment is used at many sporting events, but the "kiss cam" and "dance cam" segments that are popular at overseas events are too difficult for shy Japanese audiences to join. To solve this problem, we developed a game system that uses machine learning to detect the sound and movement of audience applause, so that every audience member can take part. The result is an interactive system that is easy for Japanese audiences to join and that gets the entire crowd excited.

An interactive music player that brings back the experience of "facing the music". Built on a completely new concept, it measures the user's brain waves and plays music only while the user is concentrating on it. The music stops when the user is spoken to or thinks about something else, so listeners naturally condition themselves to "listen with their whole body" in order to hear the music.

https://neurowear.com/neuroturntable/
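
The gating behavior described above (music plays only while the listener concentrates) could be sketched roughly as follows. This is a minimal sketch under stated assumptions: attention arrives as a stream of scores between 0 and 1, and the function name and `threshold` are hypothetical, not taken from the actual player.

```python
from typing import Iterable, List


def playback_states(attention_samples: Iterable[float], threshold: float = 0.5) -> List[str]:
    """Return "play" or "pause" for each hypothetical attention sample.

    Music plays while attention is at or above the (assumed) threshold
    and pauses as soon as it drops below it.
    """
    return ["play" if sample >= threshold else "pause" for sample in attention_samples]
```

A real player would probably smooth the signal over time so that brief dips do not interrupt the music; that smoothing is left out here to keep the idea visible.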

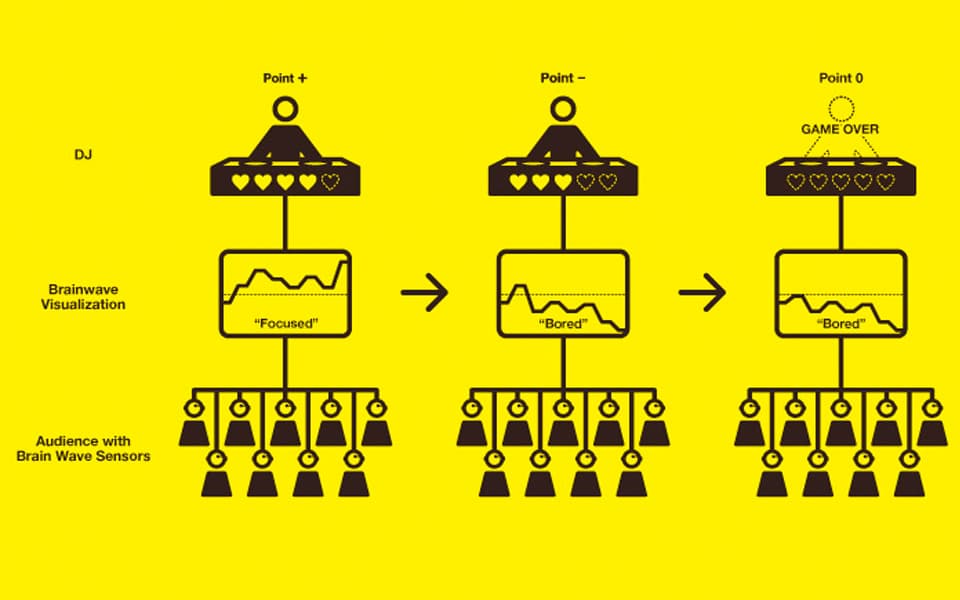

In collaboration with Qosmo, we developed “Brain Disco”, in which the DJ must hold the audience’s “attention”, measured with brainwave sensors, in order to keep DJing. If the average attention value stays below a given threshold, the DJ gets kicked out.

https://neurowear.com/braindisco/
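
The Brain Disco rule (the DJ is removed if average audience attention stays below a threshold) can be sketched as below. How long the average must stay low is not stated, so `max_low_ticks`, the per-tick sampling, and the function name are assumptions made for illustration.

```python
from statistics import mean
from typing import Sequence


def dj_survives(
    readings: Sequence[Sequence[float]],
    threshold: float,
    max_low_ticks: int,
) -> bool:
    """Return False if the audience average stays below threshold too long.

    readings: one entry per time tick, each holding the attention values
    of all listeners at that tick (hypothetical data layout).
    max_low_ticks: assumed number of consecutive low ticks that gets the
    DJ kicked out.
    """
    low_streak = 0
    for tick in readings:
        if mean(tick) < threshold:
            low_streak += 1
            if low_streak >= max_low_ticks:
                return False  # attention stayed low for too long
        else:
            low_streak = 0  # the audience is engaged again; reset the streak
    return True
```

Counting consecutive low ticks, rather than reacting to a single low reading, is one way to model the word "remains" in the description.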

"mico" is a system that selects music that fits the user's brainwave state simply by wearing the device. The system analyzes brain waves and automatically plays music that fits the brain wave state selected by mico algorithm. mico was first unveiled at SXSW 2014. The music selection algorithm was jointly developed by Associate Professor Yasue Mitsukura and Researcher Mikito Ogino of the Mitsukura Laboratory at Keio University, utilizing 15 years of EEG measurement data.

https://neurowear.com/mico/

A visual sequencer for a wall-and-floor display, developed for the entrance of "SUMMER SONIC 2022 TOKYO" held on August 20 and 21, 2022. Each person is assigned the sound of a musical instrument, and organic gradients are generated beneath their feet. The gradients appear and disappear as the assigned instruments play in rhythm with the underlying background music. It is as if you have become a part of the music.

https://vimeo.com/818967050

A special exhibition for “Capturing Sounds” held at the Kyoto Okazaki Music Festival 2017 OKAZAKI LOOPS on June 10 and 11, 2017. A space with nothing but light and sound. In a room with a laminated mirror built from LED displays and a half-mirror, light and music emerge endlessly, giving visitors the sensation of stepping "inside" the sound and light.

https://vimeo.com/222513940

When a person speaks into the microphone, the waveform of the sound is analyzed and fish of various colors and shapes begin to swim in the aquarium. The shape of each fish depends on the volume and length of the voice, as well as the combination of high and low pitches, so each individual voice creates many different kinds of fish. Furthermore, when you touch a created fish, it speaks the words that were spoken into the microphone.

https://vimeo.com/214811316